
Robotic fabrication has traditionally required specialized skills, detailed digital designs, and lengthy production times. In most environments—industrial, commercial, or operational—this limits how quickly custom structures or tools can be produced. For emergency responders and defense units operating in dynamic conditions, the delay between identifying a need and producing a physical solution can be critical.

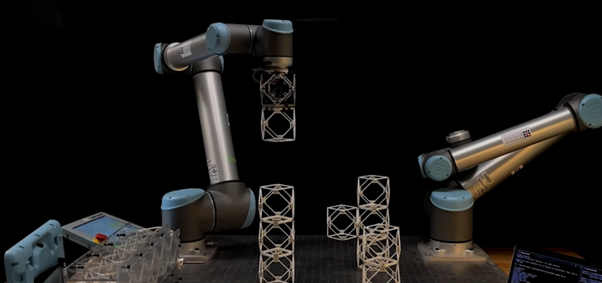

Researchers at MIT have introduced a new approach that attempts to shorten that gap. Their speech-to-reality system enables a robotic arm to construct physical objects directly from a voice prompt, turning spoken requests into modular, assembled structures within minutes. Rather than relying on 3D printing—which can take hours—the system rapidly converts natural-language instructions into actionable fabrication steps.

According to TechXplore, the process begins with speech recognition linked to a large language model, which interprets a user request such as “build a simple stool.” A 3D generative AI model then produces a digital mesh of the requested object. A voxelization algorithm breaks that mesh into discrete components suitable for assembly with the system’s modular building blocks. Additional geometric processing adjusts the design to account for real-world constraints such as stability, connectivity, and allowable overhangs.
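The article does not say which voxelizer or constraint checks the MIT team uses. As a rough illustration only, the sketch below assumes the generated mesh has already been sampled into surface points. It snaps those points to a uniform grid of block-sized cells and then runs two simple checks of the kind described: face connectivity, and a hypothetical overhang rule in which a block may extend at most `max_overhang` cells sideways from a block that rests on something. All function names and the overhang rule are assumptions made for this example.

```python
import math
from collections import deque

def voxelize_points(points, voxel_size):
    """Snap sampled (x, y, z) surface points to the integer grid cells containing them."""
    return {
        (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        for x, y, z in points
    }

def face_neighbors(v):
    """The six cells sharing a face with cell v."""
    x, y, z = v
    return [(x + 1, y, z), (x - 1, y, z), (x, y + 1, z),
            (x, y - 1, z), (x, y, z + 1), (x, y, z - 1)]

def is_connected(voxels):
    """True if all cells form one face-connected piece (breadth-first search)."""
    if not voxels:
        return True
    start = next(iter(voxels))
    seen = {start}
    queue = deque([start])
    while queue:
        for n in face_neighbors(queue.popleft()):
            if n in voxels and n not in seen:
                seen.add(n)
                queue.append(n)
    return len(seen) == len(voxels)

def overhang_violations(voxels, max_overhang=1):
    """Cells that cantilever more than max_overhang steps sideways from a cell
    resting on the ground or on a filled cell below (hypothetical rule)."""
    if not voxels:
        return set()
    ground = min(z for _, _, z in voxels)
    layers = {}
    for v in voxels:
        layers.setdefault(v[2], set()).add(v)
    violations = set()
    for z, layer in layers.items():
        # Directly supported cells are at distance 0 from support.
        frontier = deque(v for v in layer if z == ground or (v[0], v[1], z - 1) in voxels)
        dist = {v: 0 for v in frontier}
        # Grow sideways within the layer, stopping at the overhang limit.
        while frontier:
            v = frontier.popleft()
            if dist[v] == max_overhang:
                continue
            x, y, _ = v
            for n in [(x + 1, y, z), (x - 1, y, z), (x, y + 1, z), (x, y - 1, z)]:
                if n in layer and n not in dist:
                    dist[n] = dist[v] + 1
                    frontier.append(n)
        # Any cell not reached is too far from support.
        violations |= {v for v in layer if v not in dist}
    return violations
```

In practice `voxel_size` would match the physical pitch of the modular blocks, and a real pipeline would also fill the interior of the mesh rather than only its surface; this sketch skips both concerns.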

Once the digital model is finalized, a planning algorithm determines the order in which pieces should be assembled and maps a collision-free path for the robotic arm. The system has already produced stools, chairs, shelving units, small tables, and decorative pieces in under five minutes.
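The article does not describe the planner's internals. One simple way to produce a physically sensible build order, assumed here purely for illustration, is to work bottom-up layer by layer and, within each layer, start from blocks resting on something below and grow outward through side neighbors. The design is represented as a set of integer (x, y, z) grid cells, and `assembly_order` is a hypothetical name.

```python
from collections import deque

def assembly_order(voxels):
    """Bottom-up build sequence in which each block, when placed, rests on the
    ground, on a placed block below, or beside an already placed block
    (a hypothetical feasibility rule, not the researchers' planner)."""
    if not voxels:
        return []
    ground = min(z for _, _, z in voxels)
    order, placed = [], set()
    for z in sorted({v[2] for v in voxels}):
        layer = {v for v in voxels if v[2] == z}
        # Seed the layer with blocks that sit on the ground or on a placed block.
        seeds = sorted(v for v in layer if z == ground or (v[0], v[1], z - 1) in placed)
        queue, seen = deque(seeds), set(seeds)
        # Grow outward through side neighbors so no block is placed floating.
        while queue:
            v = queue.popleft()
            order.append(v)
            placed.add(v)
            x, y, _ = v
            for n in sorted([(x + 1, y, z), (x - 1, y, z), (x, y + 1, z), (x, y - 1, z)]):
                if n in layer and n not in seen:
                    seen.add(n)
                    queue.append(n)
        if len(seen) != len(layer):
            raise ValueError(f"layer z={z} contains blocks with no support path")
    return order
```

Breadth-first growth inside each layer means a cantilevered block is always set down after the block it hangs from. Computing a collision-free arm path for each placement, which the article also mentions, is a separate motion-planning problem that this sketch does not address.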

For defense and homeland-security applications, rapid on-site fabrication could reduce logistical strain in forward environments. Field teams could generate mission-specific structures—temporary work surfaces, UAV launch frames, sensor mounts, replacement parts, or improvised tools—without relying on supply chains or dedicated fabrication units. Because the system uses modular components, these objects can later be disassembled and reused, reducing material waste in austere or remote locations.

The researchers are now working on improving load-bearing capability by upgrading the connectors between modular pieces. They have also demonstrated pathways for deploying the assembly logic on small mobile robots, potentially enabling multi-robot fabrication of larger structures. Future versions may incorporate gesture inputs alongside speech, allowing more natural human-robot interaction.

By merging language models, generative AI, and robotic assembly, the speech-to-reality system illustrates a new direction for fast, accessible, and adaptable fabrication—expanding how both civilian and security organizations might create physical objects on demand.

The research was published here.