
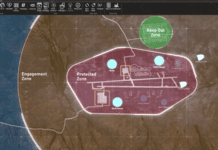

AI is increasingly found in everyday surveillance cameras and software. AI-powered video technology has been playing a growing role in tracking faces and bodies through stores, offices, and public spaces. In some countries, the technology constitutes a powerful new layer of policing and government surveillance.

New research has shown that it is possible to hide from an AI video system with the aid of a simple color printout. Researchers from KU Leuven in Belgium presented an approach for generating adversarial patches against targets with a lot of intra-class variety, namely people. They showed that a patch they designed can hide a whole person from an AI-powered computer-vision system known as a person detector. They demonstrated it on YOLOv2, a popular open-source object detection system.
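The core idea of a patch attack can be shown at toy scale. The sketch below is not the KU Leuven method and does not use YOLOv2. It assumes a hypothetical linear "person score" over 16 pixel values as a stand-in detector, and it treats a handful of random scenes as the intra-class variety. It then nudges the pixels inside a patch region to lower the average score across all scenes, clamping values to [0, 1] so the patch stays within a printable range. All function names here are illustrative.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def person_score(weights, bias, image):
    """Hypothetical stand-in for a detector's 'person' confidence.
    A real detector such as YOLOv2 is a deep CNN, not a linear model."""
    return sigmoid(sum(w * x for w, x in zip(weights, image)) + bias)


def apply_patch(image, patch, patch_idx):
    """Return a copy of the image with patch values pasted over patch_idx."""
    out = list(image)
    for value, i in zip(patch, patch_idx):
        out[i] = value
    return out


def optimize_patch(weights, bias, scenes, patch_idx, steps=300, lr=1.0):
    """Projected gradient descent on the mean person score over several
    scenes, clamping patch pixels to [0, 1] so they stay printable."""
    patch = [0.5] * len(patch_idx)  # start from a uniform gray patch
    for _ in range(steps):
        grad = [0.0] * len(patch_idx)
        for scene in scenes:
            s = person_score(weights, bias, apply_patch(scene, patch, patch_idx))
            # d sigmoid(w.x + b) / d x_i = s * (1 - s) * w_i
            for k, i in enumerate(patch_idx):
                grad[k] += s * (1.0 - s) * weights[i] / len(scenes)
        patch = [min(1.0, max(0.0, p - lr * g)) for p, g in zip(patch, grad)]
    return patch


def mean_score(weights, bias, scenes, patch, patch_idx):
    """Average person score over all scenes with the patch applied."""
    return sum(person_score(weights, bias, apply_patch(s, patch, patch_idx))
               for s in scenes) / len(scenes)


# Toy demo: 16-pixel "images", several scenes of the same "person" class.
random.seed(0)
n_pixels = 16
weights = [random.uniform(-1.0, 1.0) for _ in range(n_pixels)]
bias = 2.0
scenes = [[random.uniform(0.0, 1.0) for _ in range(n_pixels)] for _ in range(5)]
patch_idx = list(range(8))  # hypothetical: the patch covers half the pixels

gray = [0.5] * len(patch_idx)
patch = optimize_patch(weights, bias, scenes, patch_idx)
print("gray patch score:", round(mean_score(weights, bias, scenes, gray, patch_idx), 3))
print("optimized patch score:", round(mean_score(weights, bias, scenes, patch, patch_idx), 3))
```

Optimizing over several scenes at once, rather than a single image, is what pushes the patch toward something that works regardless of the surroundings. A real attack would typically backpropagate through the detector network itself instead of using a closed-form gradient like the one above.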

The trick could conceivably let crooks hide from security cameras, or offer dissidents a way to dodge government scrutiny. Intruders could sneak around undetected by holding a small cardboard plate in front of their body, aimed at the surveillance camera, according to arxiv.org/abs.

“What our work proves is that it is possible to bypass camera surveillance systems using adversarial patches,” says Wiebe Van Ranst, one of the authors.

Adapting the approach to off-the-shelf video surveillance systems shouldn’t be too hard, says Van Ranst. “At the moment we also need to know which detector is in use. What we’d like to do in the future is generate a patch that works on multiple detectors at the same time,” he told MIT Technology Review (technologyreview.com).

The technology exploits what’s known as adversarial machine learning. Most computer vision relies on training a neural network, typically a convolutional one, to recognize different things by feeding it labeled examples and tweaking its parameters until it classifies objects correctly.
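The training process described above can be sketched with the smallest possible "network": a single logistic unit learning to separate two classes of 2-D points. This is a stand-in for a convolutional network, not a model of one; the data, learning rate, epoch count, and function names are all hypothetical. Each pass shows the model a labeled example, measures how far its output is from the label, and adjusts the parameters to reduce that error.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    """Probability that x belongs to class 1 under a single logistic unit."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def train(data, epochs=200, lr=0.5):
    """Stochastic gradient descent on the log-loss: show each labeled example,
    compare the output with the label, and nudge the parameters to shrink the error."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, b, x) - y  # d(log-loss)/d(logit)
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
            b -= lr * err
    return w, b


# Toy labeled data: class 1 if the two "features" sum to more than 1.
rng = random.Random(42)
data = []
for _ in range(200):
    point = (rng.random(), rng.random())
    data.append((point, 1 if point[0] + point[1] > 1.0 else 0))

w, b = train(data)
accuracy = sum((predict(w, b, x) >= 0.5) == bool(y) for x, y in data) / len(data)
print(f"training accuracy: {accuracy:.2f}")
```

A deep network has millions of parameters instead of three, but the loop has the same shape: predict, compare with the label, adjust.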

By feeding examples into a trained deep neural net and monitoring the output, it is possible to infer what types of images confuse or fool the system.
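The probing idea can be illustrated with a query-only attack. In this sketch, `black_box` is a hypothetical classifier whose internals the attacker never reads; the attacker only calls it and watches the returned probability. A simple random search, one of many query-based techniques and chosen here for brevity, keeps any small perturbation that lowers the model's confidence, stopping once the prediction flips while the input stays within a fixed distance (`eps`, an illustrative value) of the original.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def black_box(x):
    """Hypothetical trained classifier. The attacker can call it and read the
    returned probability, but never looks at its weights or gradients."""
    w = [2.0, -1.5, 0.5, 1.0]
    b = -0.5
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def probe_for_confusing_input(model, x, eps=0.3, step=0.05, queries=2000, seed=0):
    """Query-only random search: try small random changes to x (staying within
    eps of the original in every coordinate and inside [0, 1]) and keep any
    change that lowers the model's confidence in its original answer."""
    rng = random.Random(seed)
    original_positive = model(x) >= 0.5

    def confidence(v):
        p = model(v)
        return p if original_positive else 1.0 - p

    best, best_conf = list(x), confidence(x)
    for _ in range(queries):
        cand = []
        for xi, bi in zip(x, best):
            c = bi + rng.uniform(-step, step)
            c = min(xi + eps, max(xi - eps, c))  # stay close to the original
            cand.append(min(1.0, max(0.0, c)))   # stay a valid pixel value
        cand_conf = confidence(cand)
        if cand_conf < best_conf:
            best, best_conf = cand, cand_conf
            if best_conf < 0.5:  # the model's answer has flipped
                break
    return best, best_conf


x = [0.6, 0.4, 0.5, 0.5]
adv, conf = probe_for_confusing_input(black_box, x)
print(f"original p = {black_box(x):.2f}, perturbed p = {black_box(adv):.2f}")
```

Random search like this can need many queries; an attacker with access to the model's gradients can usually find such inputs far more efficiently.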