Browser extensions have long been treated as harmless productivity tools, but their growing access to sensitive data is becoming a serious security concern. As more users rely on AI chatbots for work-related research, coding, and decision support, the content of those conversations is increasingly valuable. That makes AI chat interfaces an attractive target for silent data collection, often without users realizing anything is wrong.

Security researchers have now uncovered two Chrome extensions that were doing exactly that. Marketed as AI sidebars and chatbot helpers, the extensions secretly collected conversations from ChatGPT and DeepSeek, along with users’ browsing activity, and sent the data to external servers controlled by unknown operators. Together, the two add-ons were installed by more than 900,000 users before the activity was exposed.

According to The Hacker News, the mechanism was relatively simple but effective. After installation, users were asked to approve what appeared to be standard permissions for anonymous analytics. In reality, the extensions monitored open browser tabs and directly extracted chatbot conversations by scanning the structure of AI chat web pages. The captured content was stored locally and then transmitted at regular intervals to remote command-and-control servers. This technique, now referred to by researchers as “prompt poaching”, allows attackers to harvest entire AI interactions rather than isolated snippets.
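For readers who want to check their own add-ons, the permission pattern described above can be screened for with a small script. The following is a minimal defensive sketch in Python, not the researchers' tooling. It assumes an extension's `manifest.json` has already been parsed into a dictionary. The list of AI chat hosts, the set of permissions treated as sensitive, and the function names `pattern_covers_host` and `audit_manifest` are illustrative assumptions, not details taken from the report.

```python
# Assumption: hosts treated as "AI chat" pages for this illustration.
AI_CHAT_HOSTS = ("chatgpt.com", "chat.openai.com", "chat.deepseek.com")

# Assumption: permissions that let an extension watch tabs or inject scripts.
SENSITIVE_PERMISSIONS = {"tabs", "scripting", "webNavigation", "history"}

# Match patterns that apply to every site.
BROAD_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def pattern_covers_host(pattern: str, host: str) -> bool:
    """Return True if a Chrome match pattern could apply to `host`."""
    if pattern in BROAD_PATTERNS:
        return True
    if "://" not in pattern:
        return False
    # Match patterns look like scheme://host/path; isolate the host part.
    host_part = pattern.split("://", 1)[1].split("/", 1)[0]
    if host_part == "*":
        return True
    if host_part.startswith("*."):
        base = host_part[2:]
        # "*.example.com" covers example.com and all of its subdomains.
        return host == base or host.endswith("." + base)
    return host_part == host


def audit_manifest(manifest: dict) -> list[str]:
    """List reasons an extension manifest deserves a closer look."""
    findings = []
    perms = {p for p in manifest.get("permissions", []) if isinstance(p, str)}
    for perm in sorted(perms & SENSITIVE_PERMISSIONS):
        findings.append(f"sensitive permission: {perm}")

    # Collect every URL pattern the extension can read or inject into.
    patterns = list(manifest.get("host_permissions", []))
    for script in manifest.get("content_scripts", []):
        patterns.extend(script.get("matches", []))
    # Manifest V2 lists host patterns alongside ordinary permissions.
    patterns.extend(p for p in perms if "://" in p or p == "<all_urls>")

    for host in AI_CHAT_HOSTS:
        if any(pattern_covers_host(pat, host) for pat in patterns):
            findings.append(f"can read pages on: {host}")
    return findings


if __name__ == "__main__":
    # Hypothetical manifest, invented for this example.
    example = {
        "manifest_version": 3,
        "name": "Hypothetical AI Sidebar",
        "permissions": ["tabs", "storage"],
        "host_permissions": ["https://*.deepseek.com/*"],
        "content_scripts": [{"matches": ["https://chatgpt.com/*"]}],
    }
    for finding in audit_manifest(example):
        print(finding)
```

Run against the hypothetical manifest, the script reports the `tabs` permission and page access on `chatgpt.com` and `chat.deepseek.com`. A finding is only a prompt for review, not proof of abuse: many legitimate extensions request the same permissions, which is part of why this kind of collection is hard to spot.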

What makes the issue more troubling is how difficult it can be to detect. The extensions closely imitated a legitimate AI tool with a large user base and were distributed through the official Chrome Web Store. Supporting pages, such as privacy policies and support sites, were hosted on modern AI-assisted web platforms, adding another layer of credibility and making the operation harder to spot.

Beyond individual privacy, the broader implications are significant. AI chats often contain sensitive material: internal documents, code, business strategies, operational questions, and confidential URLs. Once exfiltrated, this information can be used for phishing, corporate espionage, or identity theft, or sold to third parties. Researchers warned that organizations may have unknowingly exposed proprietary or regulated data simply through everyday AI use.

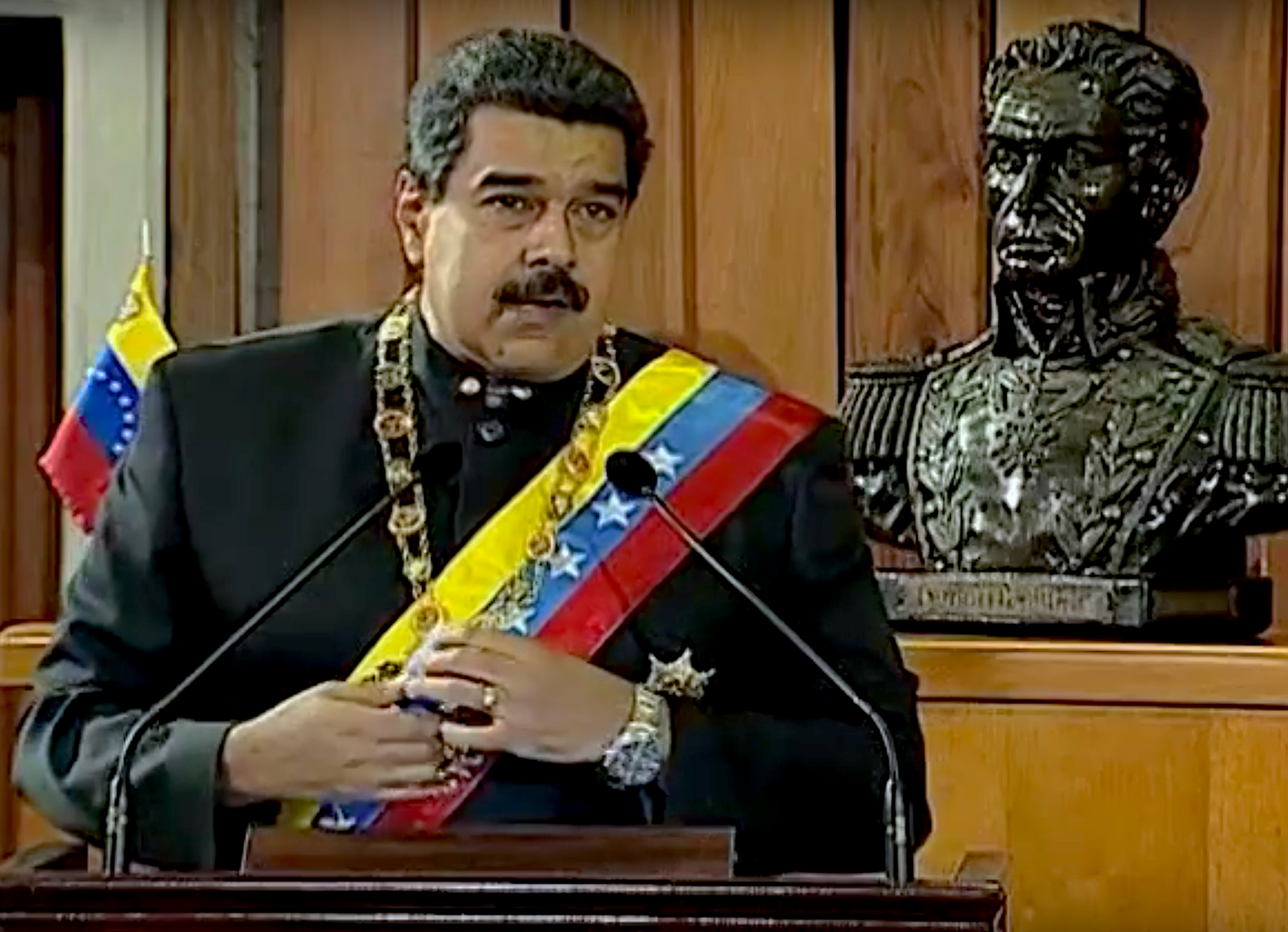

From a defense and homeland security perspective, the risk is even sharper. Military, government, and critical infrastructure personnel increasingly use AI tools for analysis and drafting. Compromised browser extensions could leak operational concepts, internal terminology, or system details without triggering traditional security alerts. This places browser add-ons squarely within the modern attack surface.

The findings also highlight a gray area: some legitimate extensions openly collect AI interaction data for analytics, raising questions about transparency, consent, and platform oversight. As AI becomes embedded in daily workflows, securing the tools that surround it may prove just as important as securing the AI systems themselves.