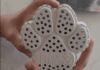

Meta Reality Labs has released HOT3D, an open-source dataset for machine learning researchers that is designed to improve how robots interact with humans and objects. The dataset focuses on 3D tracking of hand-object interactions and aims to accelerate progress in robotics, augmented reality (AR), and virtual reality (VR) systems. By providing high-quality egocentric 3D videos of people performing a range of manual tasks, HOT3D could significantly improve robotic manipulation capabilities.

The HOT3D dataset, presented in a paper on arXiv, contains over 833 minutes of video footage, totaling more than 3.7 million images, according to TechXplore. It includes multi-view RGB and monochrome image streams, capturing 19 individuals as they interact with 33 different objects. The data encompasses essential multi-modal signals such as eye gaze, scene point clouds, and highly detailed annotations, including 3D poses of hands, objects, and cameras, along with 3D models of hands and objects. This rich set of information allows researchers to create more accurate machine-learning models for hand-object interactions.
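To put those figures in perspective, the short Python sketch below works out how many images the dataset holds per second of footage. Only the two totals come from the article; the 30 frames-per-second rate is a hypothetical value chosen for illustration, not a documented property of the recording devices.

```python
# Figures quoted in the article (both are lower bounds: "over" / "more than").
TOTAL_MINUTES = 833
TOTAL_IMAGES = 3_700_000

# Hypothetical per-stream frame rate, used only for illustration.
ASSUMED_FPS = 30.0


def images_per_second(total_images: int, total_minutes: float) -> float:
    """Average number of images per second of footage, all streams combined."""
    return total_images / (total_minutes * 60.0)


def implied_streams(total_images: int, total_minutes: float, fps: float) -> float:
    """Average number of simultaneous image streams, given an assumed frame rate."""
    return images_per_second(total_images, total_minutes) / fps


rate = images_per_second(TOTAL_IMAGES, TOTAL_MINUTES)
streams = implied_streams(TOTAL_IMAGES, TOTAL_MINUTES, ASSUMED_FPS)

print(f"{rate:.1f} images per second of footage")
print(f"{streams:.2f} simultaneous streams at an assumed {ASSUMED_FPS:.0f} fps")
```

The result is roughly 74 images per second of footage, far more than a single camera would typically produce, which is consistent with the article's description of multi-view image streams recorded in parallel.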

Key tasks within the dataset include simple actions like picking up and placing objects, as well as more complex behaviors observed in real-world settings such as using kitchen utensils and typing on a keyboard. These diverse tasks, captured from the user’s perspective, provide a comprehensive look at the intricacies of hand-object interactions in everyday environments.

The dataset was compiled using two of Meta’s advanced devices: the Project Aria glasses and the Quest 3 VR headset. Project Aria glasses record video and audio and track eye movements, enabling the collection of precise data on users’ field of view and object locations. The Quest 3 headset, a commercially available VR device, further contributes by capturing immersive VR interactions.

In tests, the researchers showed that models using HOT3D's multi-view data outperformed their single-view counterparts on three critical tasks: 3D hand tracking, six-degrees-of-freedom (6DoF) object pose estimation, and 3D lifting of in-hand objects. These findings suggest that HOT3D has significant potential to improve the development of robots, human-machine interfaces, and other computer vision applications.
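For readers unfamiliar with the term, a 6DoF pose describes where an object is (three degrees of freedom for translation) and how it is oriented (three for rotation). The Python sketch below is a generic illustration of that idea and of two common ways to compare an estimated pose with a reference pose. The class, the function names, and the example numbers are all hypothetical; this is not HOT3D's API or the evaluation protocol used by the researchers.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class Pose6DoF:
    """Rigid transform: a 3x3 rotation matrix (row-major) plus a translation."""
    rotation: tuple[Vec3, Vec3, Vec3]
    translation: Vec3

    def apply(self, p: Vec3) -> Vec3:
        """Map a point from the object's frame into the camera's frame."""
        r, t = self.rotation, self.translation
        return tuple(
            r[i][0] * p[0] + r[i][1] * p[1] + r[i][2] * p[2] + t[i]
            for i in range(3)
        )


def rotation_about_z(angle_deg: float) -> tuple[Vec3, Vec3, Vec3]:
    """Rotation matrix for a turn of angle_deg degrees around the z axis."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def translation_error_m(a: Pose6DoF, b: Pose6DoF) -> float:
    """Euclidean distance between the two translations."""
    return math.dist(a.translation, b.translation)


def rotation_error_deg(a: Pose6DoF, b: Pose6DoF) -> float:
    """Angle of the relative rotation between a and b, from trace(Ra^T Rb)."""
    trace = sum(
        a.rotation[k][i] * b.rotation[k][i] for i in range(3) for k in range(3)
    )
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


# Made-up reference and estimated poses, in metres and degrees.
reference = Pose6DoF(rotation_about_z(90.0), (0.10, 0.00, 0.50))
estimate = Pose6DoF(rotation_about_z(80.0), (0.10, 0.03, 0.54))

print(f"translation error: {translation_error_m(reference, estimate):.3f} m")
print(f"rotation error: {rotation_error_deg(reference, estimate):.1f} degrees")
```

In this made-up example the estimate is off by 5 cm in position and 10 degrees in orientation. A pose estimator's job is to recover both components from camera images, and additional views can help resolve the ambiguities a single camera leaves open.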

The HOT3D dataset is now publicly available for download on the Project Aria website, offering an invaluable tool for researchers worldwide working on advancing robotic and computer vision technologies.