Computer vision is a type of artificial intelligence that allows computers to interpret images and videos. Many law enforcement and public safety organizations already use the tech to investigate crimes, monitor critical infrastructure and secure major events that could be targets for terrorists. An early version of the tech was used to identify the perpetrators of the Boston Marathon bombing in 2013, for instance.

The US intelligence community's research arm wants to train algorithms to track people across sprawling video surveillance networks, and it needs more data to do it. The data used to train these algorithms today is fairly narrow, which limits their ability to analyze the wide range of situations they would encounter in the real world.

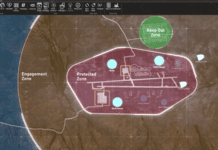

The Intelligence Advanced Research Projects Activity (IARPA) is recruiting teams to build bigger, better datasets to train computer vision algorithms that would monitor people as they move through urban environments. The training data would improve the technology’s ability to link together footage from a large network of security cameras, allowing it to better track and identify potential targets.

With the new datasets, officials aim to improve the training process and enable computer vision systems to connect footage shot from cameras positioned across a broad geographic area. “Further research in the area of computer vision within multi-camera video networks may support post-event crime scene reconstruction, protection of critical infrastructure and transportation facilities, military force protection, and in the operations of National Special Security Events,” according to IARPA’s solicitation.

Selected vendors would compile roughly 960 hours of video footage covering a variety of environments and scenarios. The dataset must include footage from at least 20 different security cameras with "varying positions, views, resolutions and frame rates" scattered across roughly 2.5 acres of "urban or semi-urban space." The videos would be shot at all hours of the day and in different weather conditions, and would include pedestrians, moving vehicles, street signs and more.
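
To put those requirements in perspective, here is a back-of-the-envelope calculation in Python. It assumes, purely for illustration, that the roughly 960 hours of footage are split evenly across the minimum of 20 cameras; `dataset_scale` is a hypothetical helper, not part of IARPA's solicitation.

```python
# Back-of-the-envelope scale of the dataset requirements described above.
# Assumption (not from the solicitation): footage is split evenly across
# the cameras. Real collections would almost certainly be uneven.

ACRE_IN_SQUARE_METERS = 4046.8564224  # international acre, exact definition


def dataset_scale(total_hours: float, num_cameras: int, area_acres: float) -> dict:
    """Return the average footage per camera and the covered area in square meters."""
    hours_per_camera = total_hours / num_cameras
    area_m2 = area_acres * ACRE_IN_SQUARE_METERS
    return {"hours_per_camera": hours_per_camera, "area_m2": area_m2}


scale = dataset_scale(total_hours=960, num_cameras=20, area_acres=2.5)
print(f"{scale['hours_per_camera']:.0f} hours of footage per camera on average")
print(f"{scale['area_m2']:,.0f} square meters of coverage in total")
```

With 20 cameras, that works out to about 48 hours, or two full days of continuous recording, per camera, spread over roughly 10,000 square meters. Because the solicitation treats 20 cameras as a minimum, adding more would only lower the per-camera average.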

Ultimately, the pedestrians in this footage are the people the algorithms would focus on to sharpen their identification and tracking skills, according to nextgov.com.