An insect-inspired autonomous navigation system for small, lightweight drones combines visual homing (using visual cues for orientation) with odometry (measuring the distance traveled in a specific direction).

A team of engineers from TU Delft says this method could enable robots to travel long distances and return home with minimal computation and memory (0.65 kB per 100 meters). “In the future, tiny autonomous robots could find a wide range of uses, from monitoring stock in warehouses to finding gas leaks in industrial sites,” they said in a statement.
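To put the memory figure in perspective, the short Python sketch below scales it to other route lengths. It assumes that memory grows linearly with distance, which the "per 100 meters" rate suggests but the article does not state outright. The function name `route_memory_kb` is made up for this illustration.

```python
import math

def route_memory_kb(distance_m, kb_per_100m=0.65):
    """Estimate route memory in kB, ASSUMING it scales linearly with distance.

    The default rate (0.65 kB per 100 m) is the figure quoted in the article;
    the linear scaling itself is an assumption made for this illustration.
    """
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    return kb_per_100m * distance_m / 100.0

# Print rough estimates for a few hypothetical route lengths.
for meters in (100.0, 250.0, 500.0):
    print(f"{meters:.0f} m -> about {route_memory_kb(meters):.2f} kB")
```

Under that assumption, a 500-meter route would need only a few kilobytes, which is the sense in which the team describes the memory cost as minimal.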

According to Interesting Engineering, tiny robots hold significant potential for real-world applications for several reasons: their light weight keeps them safe even in a collision, and their small size lets them navigate narrow spaces. If they can be produced affordably, they can also be deployed in large numbers to cover large areas efficiently, for example in greenhouses for early pest or disease detection.

However, their small size also means limited resources, which makes autonomous operation challenging, especially navigation. GPS can aid outdoor navigation but is ineffective indoors and inaccurate in cluttered environments, while indoor wireless beacons are expensive and impractical in scenarios such as search and rescue.

The researchers explain that most AI for autonomous navigation is designed for large robots and relies on heavy, power-intensive sensors that do not fit on tiny robots. Other vision-based approaches are power-efficient but require creating detailed 3D maps, which demand processing power and memory beyond the capacity of small robots.

To solve these problems, the researchers looked to nature, taking inspiration from insects that combine odometry (tracking their own motion) with visually guided behaviors based on view memory. According to the “snapshot” model, insects such as ants periodically capture snapshots of their surroundings and later compare what they see with those stored views to find their way back.

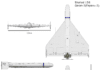

They developed a bio-inspired approach that combines visual homing (which sets orientation relative to visual cues in the environment) with odometry (which measures the distance traveled along a specific direction). They then tested the method in various indoor conditions using a 56-gram Crazyflie Brushless drone equipped with a panoramic camera, a microcontroller, and 192 kB of memory.
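The interplay of the two mechanisms can be illustrated with a small simulation. The Python sketch below is not the researchers' implementation: the function names, the 10-meter snapshot spacing, the drift level, the catchment radius, and the way homing is modeled (a simple pull toward the snapshot location instead of real image comparison) are all assumptions made for illustration.

```python
import math
import random

def fly_leg(pos, heading, distance, drift_std, rng):
    """Fly `distance` along `heading` by odometry alone; Gaussian drift adds error."""
    x = pos[0] + distance * math.cos(heading) + rng.gauss(0.0, drift_std)
    y = pos[1] + distance * math.sin(heading) + rng.gauss(0.0, drift_std)
    return (x, y)

def visual_homing(pos, snapshot_pos, catchment_radius, gain=0.5, steps=20):
    """Stand-in for image-based homing (ASSUMPTION: no real images are used).

    If the drone is within the catchment radius of the snapshot location,
    it is pulled toward that location step by step; otherwise homing fails
    and the position is left unchanged.
    """
    x, y = pos
    sx, sy = snapshot_pos
    if math.hypot(sx - x, sy - y) > catchment_radius:
        return pos
    for _ in range(steps):
        x += gain * (sx - x)
        y += gain * (sy - y)
    return (x, y)

def return_home(waypoints, use_homing, drift_std=0.3, catchment_radius=2.0, seed=0):
    """Fly the stored route backwards and return the final distance to home (m)."""
    rng = random.Random(seed)
    pos = waypoints[-1]  # assume the return starts exactly at the route's end
    # Pair each snapshot to reach (target) with the one just left (prev).
    for target, prev in zip(reversed(waypoints[:-1]), reversed(waypoints[1:])):
        # Odometry: replay the stored leg in reverse (direction and distance).
        dx, dy = target[0] - prev[0], target[1] - prev[1]
        pos = fly_leg(pos, math.atan2(dy, dx), math.hypot(dx, dy), drift_std, rng)
        if use_homing:
            # Visual homing: cancel the drift accumulated on this leg.
            pos = visual_homing(pos, target, catchment_radius)
    home = waypoints[0]
    return math.hypot(pos[0] - home[0], pos[1] - home[1])

# Hypothetical 100 m straight route with a snapshot every 10 m.
route = [(10.0 * i, 0.0) for i in range(11)]
err_odometry = return_home(route, use_homing=False)
err_combined = return_home(route, use_homing=True)
print(f"odometry only:     {err_odometry:.3f} m from home")
print(f"odometry + homing: {err_combined:.6f} m from home")
```

In this toy model, odometry alone lets drift pile up from leg to leg, while a homing step at each snapshot resets the error before the next leg begins. That is why the snapshots can sit relatively far apart, as long as the drift on any single leg stays within the range where homing still works.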

They found that the approach was highly memory-efficient because the snapshots are heavily compressed and precisely spaced. The proposed strategy is less versatile than state-of-the-art methods because it lacks mapping capability, but it does enable a return to the starting point, which is useful for many applications: a drone could fly out, collect data, and then return to the base station, as in greenhouse crop monitoring or warehouse stock tracking.