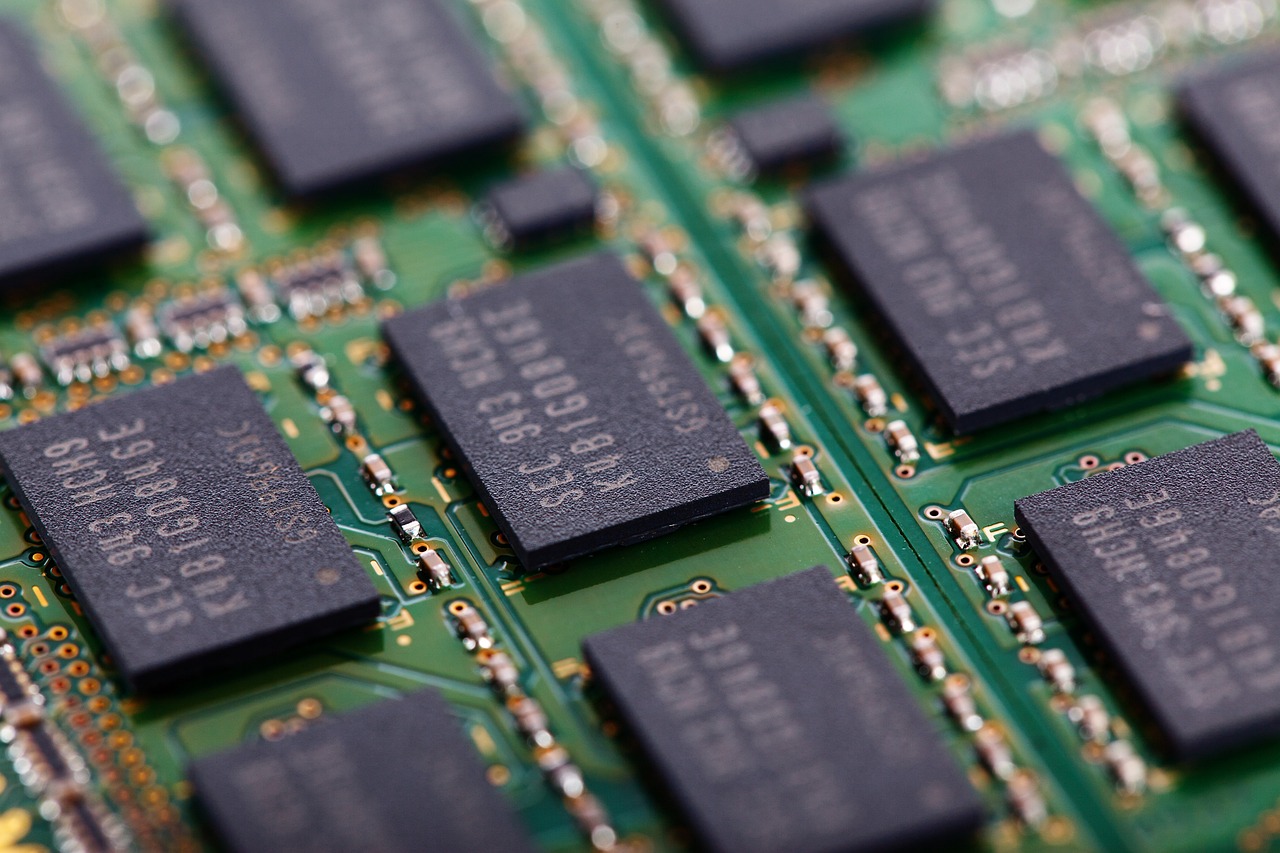

AI accelerators are a class of hardware designed, as the name suggests, to accelerate AI models. By boosting the performance of algorithms, the chips can improve results in data-heavy applications like natural language processing or computer vision. As AI models increase in sophistication, however, so does the amount of power required to support the hardware that underpins algorithmic systems.

IBM’s research department has been working on new designs for chips that are capable of handling complex algorithms without growing their carbon footprint, i.e. their total greenhouse gas emissions. The major challenge is to come up with a technology that doesn’t require exorbitant amounts of energy, without sacrificing compute power. The company has designed what it claims is the world’s first AI accelerator chip built on high-performance seven-nanometer technology that also achieves high levels of energy efficiency. The four-core chip, still at the research stage, is expected to be capable of supporting various AI models and of achieving “leading-edge” power efficiency.

Prepared to dive into the world of futuristic technology? Attend INNOTECH 2023, the international convention and exhibition for cyber, HLS and innovation at Expo, Tel Aviv, on March 29th-30th

Interested in sponsoring / a display booth at the 2023 INNOTECH exhibition? Click here for details!

The new chip is highly optimized for low-precision training. It is the first silicon chip to incorporate an ultra-low-precision technique called the hybrid FP8 format – an eight-bit training technique developed by Big Blue that preserves model accuracy across deep-learning applications such as image classification, or speech and object detection.
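To give a feel for what an eight-bit floating-point value can and cannot represent, here is a minimal Python sketch that rounds a number to the nearest value of a toy 8-bit float. The article does not spell out the bit layout; IBM's published research on hybrid FP8 describes, as far as we understand it, a split with more mantissa bits for the forward pass (1 sign, 4 exponent, 3 mantissa) and more exponent bits for gradients (1 sign, 5 exponent, 2 mantissa), so treat those splits as an assumption here. The function name `quantize` and the IEEE-style bias and range conventions are also illustrative choices, not IBM's exact encoding.

```python
import math


def quantize(x: float, exp_bits: int, man_bits: int) -> float:
    """Round x to the nearest value of a toy (1, exp_bits, man_bits) float.

    Assumptions (not from the article): IEEE-style bias, the top exponent
    code is reserved, subnormals are supported, and overflow saturates.
    """
    if x == 0.0 or math.isnan(x):
        return x
    bias = 2 ** (exp_bits - 1) - 1
    min_exp = 1 - bias                    # smallest normal exponent
    max_exp = 2 ** exp_bits - 2 - bias    # largest usable exponent
    max_val = (2 - 2.0 ** -man_bits) * 2.0 ** max_exp

    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), max_val)            # saturate instead of overflowing

    # frexp gives mag = m * 2**e with 0.5 <= m < 1, so floor(log2(mag)) = e - 1.
    _, e = math.frexp(mag)
    exponent = max(e - 1, min_exp)        # clamp: tiny values use subnormal spacing
    step = 2.0 ** (exponent - man_bits)   # gap between neighbouring values
    q = round(mag / step) * step          # round to nearest, ties to even
    return sign * min(q, max_val)


if __name__ == "__main__":
    for value in (0.1, 1000.0):
        print(value, quantize(value, 4, 3), quantize(value, 5, 2))
```

Under these assumptions, 0.1 becomes 0.1015625 in the 4-exponent-bit layout and 0.09375 in the 5-exponent-bit layout, while 1000.0 saturates at 240.0 in the former and rounds to 1024.0 in the latter. That is the trade-off a hybrid scheme exploits: more mantissa bits give finer precision, more exponent bits give wider range.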

Equipped with an integrated power management feature, the accelerator chip can maximize its own performance, for example by slowing down during computation phases with high power consumption. The chip also has high utilization, with experiments showing more than 80% utilization for training (learning a new capability from data) and 60% utilization for inference (applying a capability to new data) – far more, according to IBM’s researchers, than typical GPU utilization, which stands below 30%.
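As a rough illustration of why utilization matters, the sketch below treats sustained throughput as peak throughput multiplied by utilization and compares the figures quoted above. The helper name `sustained_throughput` and the peak value of 100 (arbitrary units) are hypothetical, and the comparison assumes both chips have the same peak throughput, which the article does not claim.

```python
def sustained_throughput(peak: float, utilization: float) -> float:
    """Throughput actually delivered when only a fraction of peak is used."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak * utilization


if __name__ == "__main__":
    PEAK = 100.0  # hypothetical peak throughput, same for both chips

    gpu = sustained_throughput(PEAK, 0.30)        # upper bound quoted for GPUs
    training = sustained_throughput(PEAK, 0.80)   # reported training utilization
    inference = sustained_throughput(PEAK, 0.60)  # reported inference utilization

    print(f"training advantage:  {training / gpu:.2f}x")
    print(f"inference advantage: {inference / gpu:.2f}x")
```

Because the GPU figure is quoted as "below 30%", the ratios this prints are lower bounds under the equal-peak assumption.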

This translates, once more, into better application performance, and is also a key part of engineering the chip for energy efficiency, according to zdnet.com. Expected applications include large-scale deep-training models in the cloud, ranging from speech-to-text AI services to financial transaction fraud detection. Applications at the edge, too, could find a use for the new technology, with autonomous vehicles, security cameras and mobile phones all potentially benefiting from highly performant AI chips that consume less energy.
