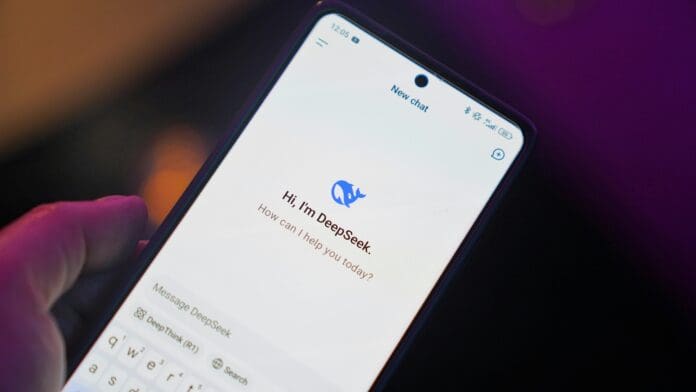

A recent development in artificial intelligence suggests machines may be getting closer to independent reasoning. Researchers from DeepSeek AI have shown that their R1 model was trained to solve complex problems without step-by-step human instruction, marking a shift in how reasoning capabilities are taught to AI.

Published in Nature, the study outlines how the R1 model learned to tackle advanced tasks in mathematics, science, and programming using reinforcement learning rather than traditional supervised methods. Instead of being shown countless examples of how to work through a problem, the model was trained through trial and error, receiving feedback only on whether its final answer was correct.
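To make the idea of outcome-only feedback concrete, here is a minimal toy sketch. It is not the training procedure from the study: a simple epsilon-greedy bandit is swapped in for illustration, and the strategy names, the `outcome_reward` and `train` functions, and every parameter are hypothetical. The learner is never told how to add two numbers. It only learns whether the final answer it produced was right.

```python
import random


def outcome_reward(final_answer: str, reference: str) -> float:
    """Reward depends only on the final answer, never on the steps taken."""
    return 1.0 if final_answer.strip() == reference.strip() else 0.0


# Hypothetical strategies standing in for different ways of attempting a problem.
STRATEGIES = {
    "guess_zero": lambda a, b: "0",
    "off_by_one": lambda a, b: str(a + b + 1),
    "add_correctly": lambda a, b: str(a + b),
}


def train(episodes: int = 500, epsilon: float = 0.1, seed: int = 0) -> dict:
    """Trial and error: estimate each strategy's mean reward from outcomes alone."""
    rng = random.Random(seed)
    values = {name: 0.0 for name in STRATEGIES}  # running mean reward
    counts = {name: 0 for name in STRATEGIES}    # times each strategy was tried
    for _ in range(episodes):
        a, b = rng.randint(1, 9), rng.randint(1, 9)
        if rng.random() < epsilon:
            name = rng.choice(list(STRATEGIES))  # explore a random strategy
        else:
            name = max(values, key=values.get)   # exploit the best one so far
        reward = outcome_reward(STRATEGIES[name](a, b), str(a + b))
        counts[name] += 1
        values[name] += (reward - values[name]) / counts[name]
    return values


values = train()
print(max(values, key=values.get), values)
```

In this toy setting the only signal is a 0 or 1 per attempt, yet the learner ends up preferring whichever strategy produces correct answers. A real system like the one described here optimizes a large language model rather than a three-entry lookup table, so this sketch shows only the feedback structure.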

This method mirrors how humans often learn: not by memorizing every step, but by experimenting, checking results, and adjusting strategies. During training, R1 began demonstrating behaviors like verifying its own solutions and choosing different approaches to reach the correct outcome. In some cases, it even used cues such as “wait” as it internally assessed its reasoning, according to TechXplore.

What makes this approach significant is that it avoids some of the downsides of conventional training methods, such as human bias or over-reliance on predefined solutions. Human involvement in the R1 project was minimal and limited to refining its abilities after the core training was complete.

Performance testing showed promising results. On the 2024 American Invitational Mathematics Examination (AIME), a notoriously difficult test aimed at high-achieving high school students, R1 reached 86.7% accuracy, surpassing previous models that relied heavily on human-labeled data.

Despite the strong results, researchers acknowledge the system still has flaws. In certain situations, R1 overcomplicated simple tasks or switched languages mid-task when prompted in a language other than English. These issues highlight the ongoing challenges of developing general-purpose, autonomous reasoning systems.

Still, the ability of R1 to self-learn problem-solving skills points toward a potential shift in AI development. If refined further, models trained through reinforcement learning could form the foundation for next-generation AI systems that are more adaptable, autonomous, and capable of reasoning independently in real-world scenarios.