
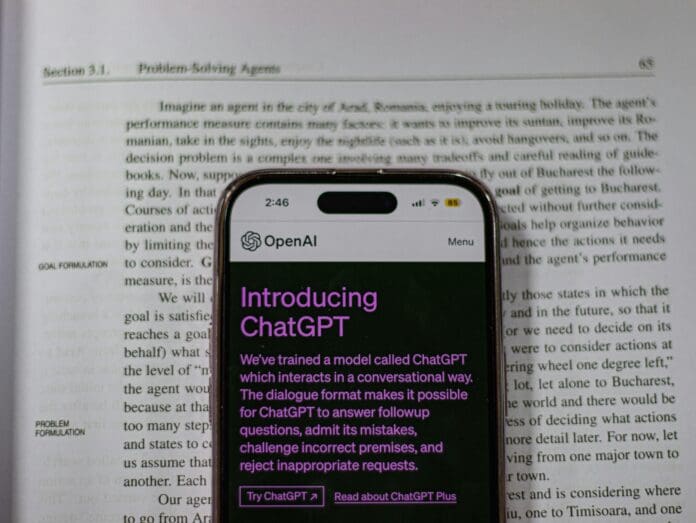

OpenAI has banned a number of accounts belonging to users in China and North Korea, citing concerns that its technology was being used for harmful purposes, including surveillance and manipulating public opinion. The artificial intelligence company disclosed these actions in a report published in late February, emphasizing the risk that authoritarian regimes could exploit AI tools for geopolitical and domestic control.

According to OpenAI, these operations were detected using its own AI-driven tools designed to monitor misuse of its platform. The company did not specify how many accounts it banned or over what period the removals took place, but it did highlight several notable incidents of misuse.

According to Reuters, one example involved users in China who used OpenAI's ChatGPT to generate Spanish-language articles criticizing the United States. These articles were then published by major media outlets in Latin America, in what was viewed as an attempt to spread anti-U.S. propaganda to foreign audiences.

In another instance, individuals linked to North Korea reportedly used ChatGPT to create fake résumés and online profiles aimed at Western companies, in an effort to obtain jobs under false pretenses, potentially for espionage or other illicit purposes.

OpenAI also detected a set of accounts believed to be associated with a financial fraud operation based in Cambodia. These accounts used the platform's technology to translate content and generate posts on social media platforms such as X (formerly Twitter) and Facebook, likely in support of deceptive financial schemes.

As AI technology continues to evolve, OpenAI's decision to remove malicious accounts is a reminder of the growing need for robust safeguards. The company's proactive approach highlights the importance of ethical AI deployment and the role tech companies must play in preventing misuse. As AI's influence expands, governments and organizations will need to collaborate on clearer regulations and countermeasures to keep malicious actors from weaponizing it. The episode also underscores the delicate balance between innovation and security in an increasingly interconnected world.