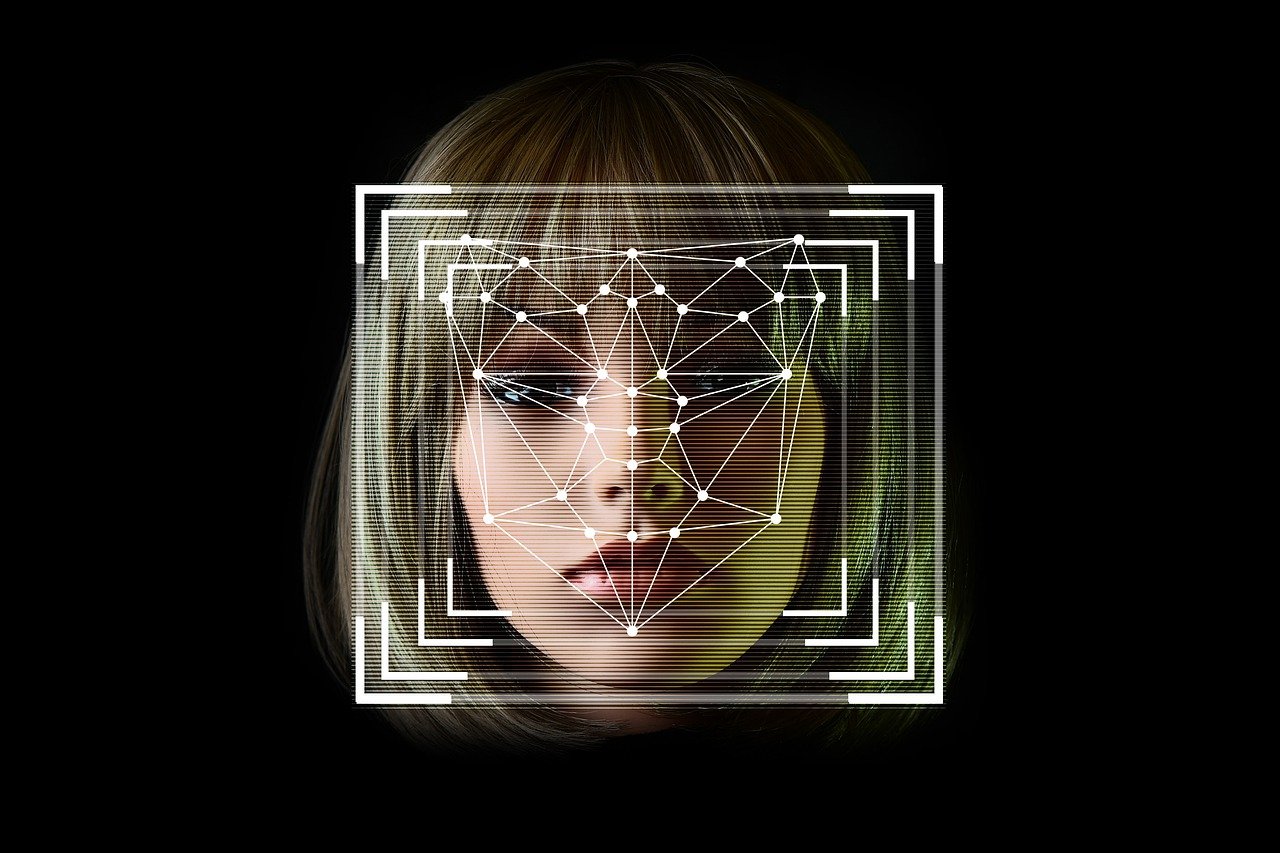

Deepfake technology, the generation of fake images, videos, and sounds that seem completely authentic and can confuse even expert viewers and listeners, has been growing in both popularity and sophistication. It has brought many problems with it and has proven capable of influencing important aspects of our lives, such as our politics.

This “new era” of sophisticated deception sees forged images and videos making headlines, and though they are often identified quickly, the damage can sometimes already be done by the time they are taken down, especially in this age of online virality.

According to Techxplore, digital manipulations that alter or completely synthesize human faces are contributing to fake news and poisoning public trust in digital media. Recent research published in the “International Journal of Autonomous and Adaptive Communications Systems” describes a new approach that identifies whether media is a deepfake by spotting illumination inconsistencies within images.

The researchers from the University of Information Science and Technology in China explain that deepfakes can be classified into four main types, each posing a different threat: identity swaps, expression swaps, attribute manipulations, and entire face synthesis.

They emphasize that the most dangerous types are “identity swaps” (where one person’s face is superimposed on another’s) and “expression swaps” (which transfer facial expressions from one individual to another), and add that deepfakes in general can seriously harm the reputation and public perception of the person being “deepfaked”.

When it comes to detection, the usual approach is “binary classification”, which labels media as either real or fake. It can fail, however, when videos or images are highly compressed or of poor quality, since this obscures facial features and reduces the chance of detection. Yet even experts in deepfake generation can struggle to match the lighting perfectly to the original media. The researchers focused on this weakness in their detection method, which uses a neural network to spot illumination discrepancies.
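To give a rough feel for what an “illumination discrepancy” means, here is a minimal Python sketch. It is not the researchers’ neural network, whose details are not given here. It is a hand-written heuristic that assumes a grayscale image stored as a list of rows and two hypothetical bounding boxes, one around the face and one around the surrounding scene. The function names `mean_gradient_angle` and `illumination_mismatch` are invented for this illustration.

```python
import math


def mean_gradient_angle(gray, box):
    """Angle (radians) of the average brightness gradient inside a box.

    gray: 2D list of luminance values (rows of numbers).
    box:  (top, left, bottom, right), with bottom and right exclusive.
    This is a crude stand-in for "which way the light falls off".
    """
    top, left, bottom, right = box
    gx_sum = 0.0
    gy_sum = 0.0
    # Skip the box border so the central differences stay inside the box.
    for y in range(top + 1, bottom - 1):
        for x in range(left + 1, right - 1):
            gx_sum += (gray[y][x + 1] - gray[y][x - 1]) / 2.0
            gy_sum += (gray[y + 1][x] - gray[y - 1][x]) / 2.0
    return math.atan2(gy_sum, gx_sum)


def illumination_mismatch(gray, face_box, context_box):
    """Angular difference in degrees (0 to 180) between the two regions."""
    a = mean_gradient_angle(gray, face_box)
    b = mean_gradient_angle(gray, context_box)
    diff = abs(a - b) % (2 * math.pi)
    return math.degrees(min(diff, 2 * math.pi - diff))


# Synthetic example: the left half brightens to the right,
# the right half brightens downward, so the "lighting" disagrees.
image = [[x * 10 if x < 8 else y * 10 for x in range(16)] for y in range(8)]
print(illumination_mismatch(image, (0, 0, 8, 8), (0, 8, 8, 16)))
```

A large angle hints that the face and its surroundings are lit from different directions. Real images are far noisier than this toy case, which is presumably why the researchers rely on a trained neural network rather than a fixed rule like this one.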