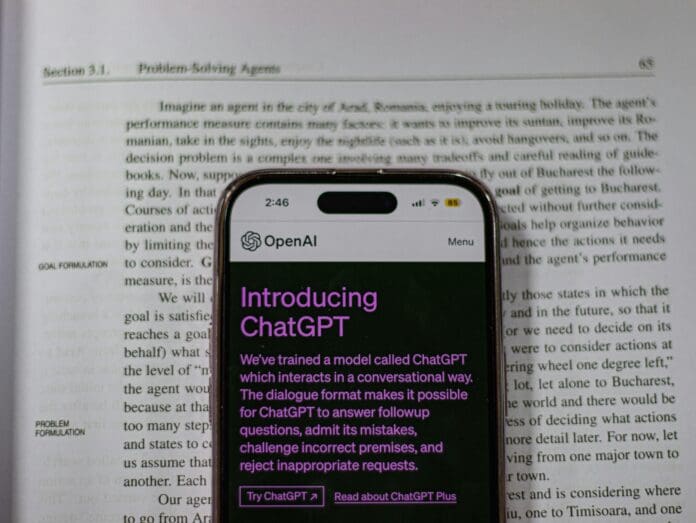

OpenAI is taking a bold new direction in AI personalization: a model that can retain and reason over a person's entire digital footprint. At a recent AI event, OpenAI CEO Sam Altman described plans to expand ChatGPT's capabilities far beyond simple dialogue. The aim is a system that understands users deeply by continuously learning from their life data.

The goal, as outlined during the discussion, is to build an AI assistant with access to "trillions of tokens" of context. This would include every prior conversation, emails, documents, books, browsing history, and any external data sources the user connects. In effect, the model would maintain a continuously updated context window that mirrors a person's entire life experience.

Altman explained that younger users are already paving the way for this transformation. Many university students reportedly treat ChatGPT as a personal operating system: they upload class materials, link databases, and use sophisticated prompts to guide academic and personal decisions. For them, the assistant functions more as a trusted life advisor than a simple chatbot.

This vision aligns with current development trends in Silicon Valley, where AI-powered autonomous agents are beginning to handle routine tasks. Future iterations of such systems could, for example, manage logistics, schedule appointments, or even make online purchases based on a user’s preferences and routines.

However, this hyper-personalized AI model also raises complex questions about data security and privacy. The benefits of a truly context-aware assistant are clear, but the trade-off is handing vast amounts of personal data to a centralized platform. Critics warn that such data could be misused, either for commercial targeting or to serve political or ideological agendas.

Recent problems with overly agreeable (sycophantic) chatbot responses and ideological inconsistencies have already highlighted these pitfalls. Despite ongoing model improvements, large AI systems remain prone to hallucinations and unexpected behavior.

The promise of an AI that offers intelligent, context-aware guidance is compelling. But as these systems become more embedded in daily life, their design will need to balance utility with robust safeguards around trust, transparency, and user control.