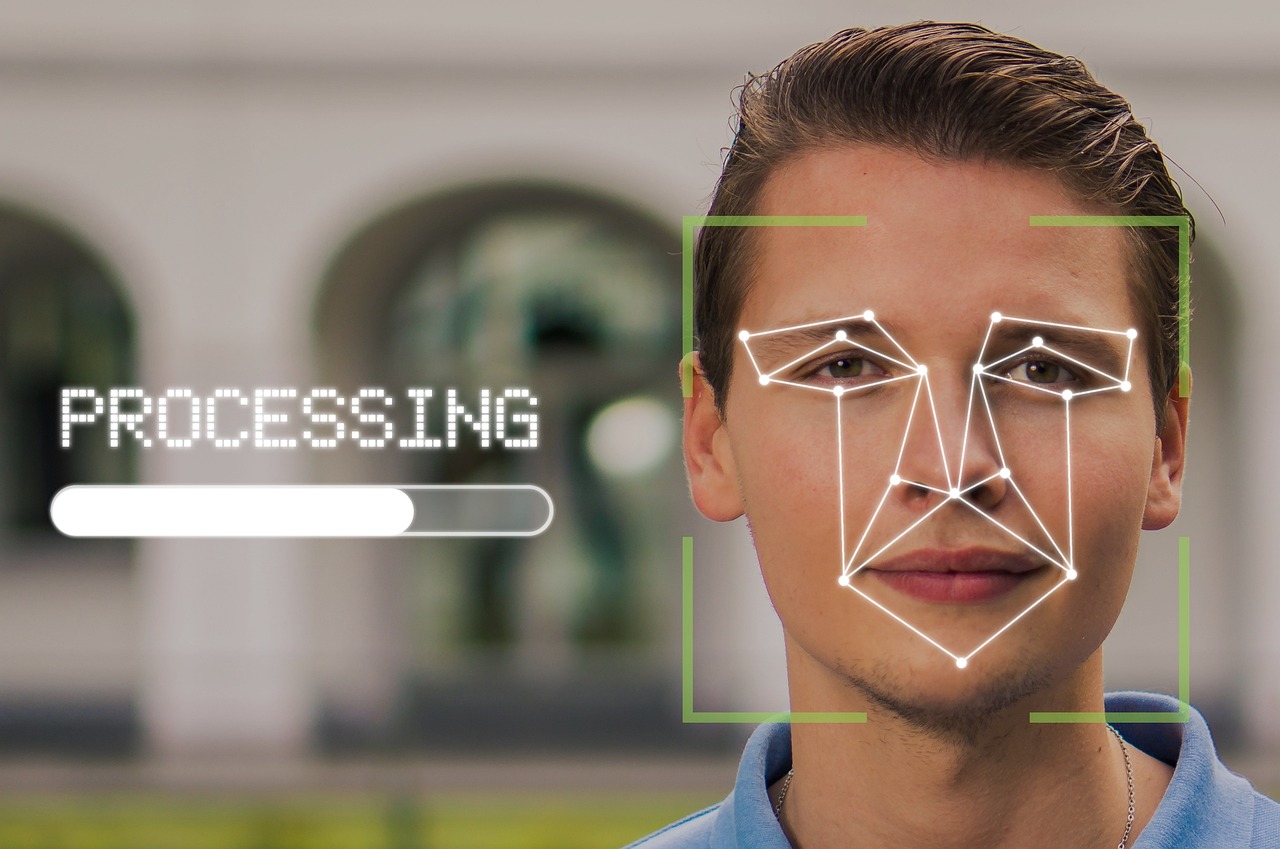

Racially biased artificial intelligence systems are not only misleading; they can be harmful and destroy people’s lives. That is the warning in a press release featuring Dr. Gideon Christian, an assistant professor at the University of Calgary Faculty of Law.

Christian, who is considered an expert on AI and the law, received a grant for a research project titled “Mitigating Race, Gender and Privacy Impacts of AI Facial Recognition Technology,” and leads an initiative to study race issues in AI-based facial recognition technology in Canada.

He warned that facial recognition technology is particularly damaging to people of color, and explained: “Technology has been shown (to) have the capacity to replicate human bias. In some facial recognition technology, there is over 99 percent accuracy rate in recognizing white male faces. But, unfortunately, when it comes to recognizing faces of color, especially the faces of Black women, the technology seems to manifest its highest error rate, which is about 35 percent.”

He further warned that facial recognition technology might wrongly match a person’s face with that of a criminal, so that someone could be arrested for a crime they never committed.

Christian explained that the technology is not inherently biased; rather, the data used to train machine learning algorithms is to blame, because the technology produces results according to what it is fed. He said that in many ways this is an old problem in a new guise, and warned that if left unaddressed it could reverse years of progress.

“Racial bias is not new,” he noted. “What is new is how these biases are manifesting in artificial intelligence technology. And this technology — and this particular problem with this technology, if unchecked — has the capacity to overturn all the progress we achieved as a result of the civil rights movement.”

This information was provided by Interesting Engineering.