As artificial intelligence models become increasingly complex, the demand for greater computational power is outpacing the capabilities of traditional digital systems. A recent study published in Nature points to a possible solution: physical neural networks that process information using the inherent properties of light.

The research, a joint effort between institutions including Politecnico di Milano, EPFL, Stanford University, the University of Cambridge, and the Max Planck Institute, explores how analog systems can be trained to perform AI tasks using photonic hardware. These systems bypass the limitations of standard digital computation by carrying out mathematical calculations directly through light interference on miniature silicon chips.
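To make the idea of computing "through light interference" more concrete, here is a minimal Python sketch of one common photonic building block, a Mach-Zehnder interferometer (MZI). The article does not say which components the chips use. The MZI, the ideal lossless 50:50 couplers, and all function names below are illustrative assumptions. The device applies a 2x2 matrix to the light amplitudes in its two waveguides, and changing the phase `theta` changes that matrix, so the multiplication happens as the light propagates.

```python
import cmath
import math

# Square matrices are stored as lists of rows of complex numbers.
Matrix = list[list[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two square matrices of the same size."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def apply(m: Matrix, x: list[complex]) -> list[complex]:
    """Apply matrix m to a vector of optical field amplitudes x."""
    return [sum(m[i][k] * x[k] for k in range(len(x))) for i in range(len(m))]


# Idealized lossless 50:50 directional coupler (an assumption, not measured data).
COUPLER: Matrix = [[1 / math.sqrt(2), 1j / math.sqrt(2)],
                   [1j / math.sqrt(2), 1 / math.sqrt(2)]]


def phase_shifter(theta: float) -> Matrix:
    """Delay the light in the upper waveguide by phase theta."""
    return [[cmath.exp(1j * theta), 0], [0, 1]]


def mzi(theta: float) -> Matrix:
    """Coupler, then phase shift, then coupler.

    Light meets the first coupler first, so it is the rightmost factor.
    """
    return matmul(COUPLER, matmul(phase_shifter(theta), COUPLER))


def powers(fields: list[complex]) -> list[float]:
    """Optical power in each waveguide is the squared magnitude of its field."""
    return [abs(f) ** 2 for f in fields]


if __name__ == "__main__":
    # Send light into the first waveguide only and sweep the phase.
    for theta in (0.0, math.pi / 2, math.pi):
        out = powers(apply(mzi(theta), [1, 0]))
        print(f"theta={theta:.2f} -> output powers {[round(p, 3) for p in out]}")
```

With light entering only the first waveguide, `theta = 0` routes all of it to the second output, `theta = math.pi` keeps it in the first, and intermediate phases split it between the two. Because the matrix is unitary, total optical power is conserved. Meshes of many such interferometers can implement larger unitary matrices in the same way.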

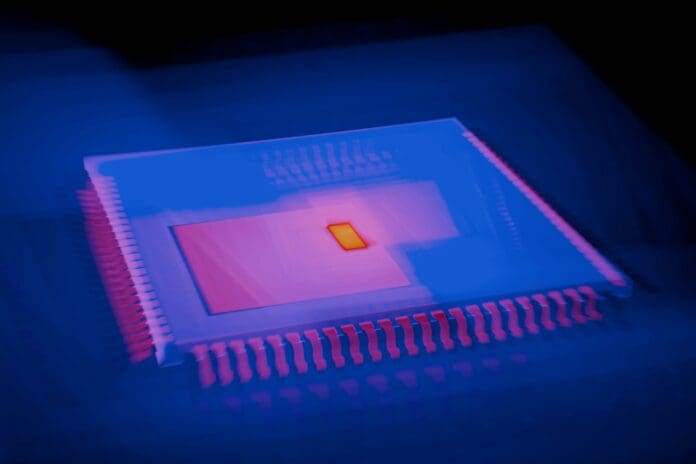

According to TechXplore, at the center of this approach are integrated photonic microchips: tiny chips capable of performing complex calculations at high speed and with low energy consumption. Unlike conventional processors, which require information to be digitized and stored in memory, photonic chips process data directly as light signals. This eliminates conversion steps that typically slow down performance and increase energy use.

The study focuses on a critical phase of AI development: training the network to perform specific tasks. Researchers have developed an “in-situ” training method for photonic neural networks, meaning the learning process happens directly within the physical system using only optical signals. This allows the training phase to be faster and potentially more stable.
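The article does not describe the training algorithm itself. As a rough illustration of what training "in the physical system" can mean, the sketch below treats the chip as a black box that can only be set and measured. It nudges a phase up and down, reads the resulting output power, and uses the difference to estimate a gradient (a finite-difference, perturbation-style scheme). This is a generic technique swapped in for illustration, not the study's method. The simulated `measured_output_power` function stands in for a photodetector reading, and all names and parameter values are assumptions. The update arithmetic here also runs in ordinary Python, whereas the approach described above uses optical signals.

```python
import math


def measured_output_power(theta: float) -> float:
    """Hypothetical stand-in for a photodetector reading.

    Fraction of input light leaving one output of an ideal Mach-Zehnder
    interferometer whose internal phase is set to theta.
    """
    return math.sin(theta / 2) ** 2


def loss(theta: float, target: float) -> float:
    """Squared error between the measured power and the desired power."""
    return (measured_output_power(theta) - target) ** 2


def train_in_situ(target: float, theta: float = 0.3, lr: float = 2.0,
                  delta: float = 1e-3, steps: int = 200) -> float:
    """Tune theta using only forward measurements of the device.

    Each step takes two extra measurements with the phase nudged by +/- delta
    and estimates the gradient with a central finite difference. The default
    start of 0.3 avoids theta = 0, where the power curve is flat and the
    estimated gradient would be zero.
    """
    for _ in range(steps):
        grad = (loss(theta + delta, target) - loss(theta - delta, target)) / (2 * delta)
        theta -= lr * grad  # plain gradient-descent update
    return theta


if __name__ == "__main__":
    theta = train_in_situ(target=0.75)
    print(f"learned phase {theta:.4f}, measured power {measured_output_power(theta):.4f}")
```

Calling `train_in_situ(target=0.75)` should settle near a phase of 2π/3 (about 2.094 rad), where sin²(θ/2) = 0.75. The key design point is that the loop never needs a model of the device, only the ability to set a parameter and read an output.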

One of the key implications of this technology is its potential to enable real-time AI processing directly at the edge, for example in autonomous vehicles, wearable sensors, or other embedded systems. Because photonic chips are compact and efficient, they can be integrated into devices where traditional AI hardware would be too bulky or power-hungry.

This work marks a step toward making artificial intelligence not only more powerful but also more sustainable, particularly as the industry grapples with the growing energy demands of large-scale AI deployments.