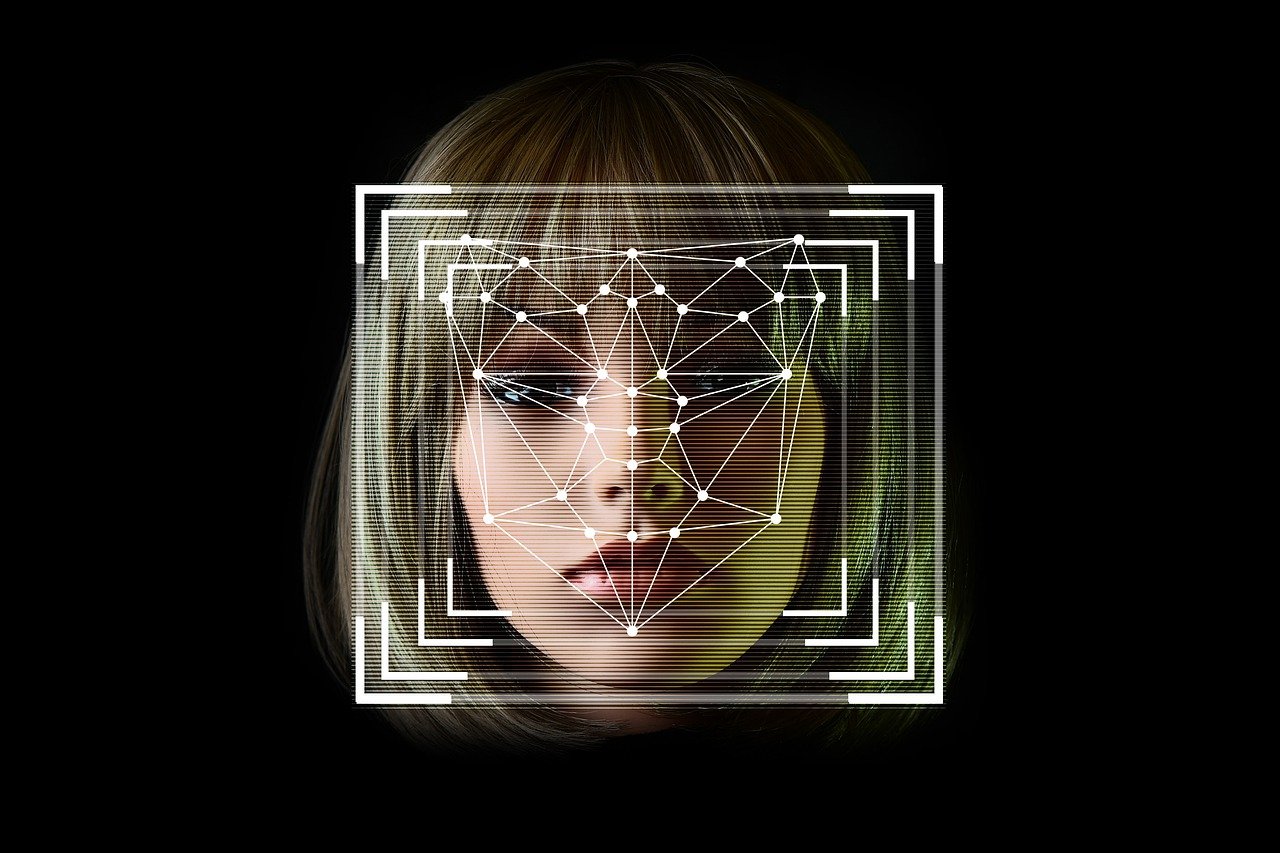

Are biometric authentication measures no longer safe? A biometric authentication expert says deepfake videos and camera injection attacks are changing the game.

Biometric authentication is becoming increasingly popular because it is fast, easy, and seamless for users, but Stuart Wells, CTO of biometric authentication company Jumio, believes this convenience may come with risks.

With the rapid rise of AI-generated online content, organizations face a growing challenge in accurately verifying users’ true identities.

In a post on Biometric Update, Wells wrote that fraudsters can use a technique called “camera injection” to feed deepfake videos into the verification system and fool biometric tools.

According to Cybernews, camera injection occurs when a malicious actor bypasses a camera’s charge-coupled device (CCD) to inject pre-recorded content, a real-time face-swap video stream, or entirely fabricated deepfake content.

The main danger of this attack is that it can go undetected by the user. If the attackers pass verification, they can cause substantial damage by stealing identities, opening fake accounts, or making transactions through the device.

So how can one stay safe from such an attack?

According to Wells, the key to safeguarding against this type of attack is implementing mechanisms that detect compromised camera device drivers and identify manipulation through forensic examination of video streams.

Real and fake videos can be distinguished by comparing the motion in the video to natural human motion. Movements such as eye movements, changes in facial expression, and a typical blinking rhythm occur organically, and their absence can indicate that a video is fake. There are also technical indicators: changes in parameters such as ISO, aperture, frame rate, and resolution, or shifts in light or color intensity, can signal a fake.
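The two kinds of cues described above, behavioral and technical, can be sketched as simple rule-based checks. The Python below is a minimal illustration, not Jumio’s method: it assumes an upstream face-landmark step (not shown) already produces a per-frame eye-openness score, and that the capture pipeline reports per-frame metadata. The thresholds, the `FrameMeta` fields, and all function names are hypothetical.

```python
from dataclasses import dataclass

# Illustrative thresholds only (assumptions, not values from Jumio or the article);
# a real system would calibrate them on genuine capture sessions.
EYE_CLOSED_THRESHOLD = 0.2               # per-frame eye-openness score below this = "closed"
PLAUSIBLE_BLINKS_PER_MIN = (5.0, 40.0)   # assumed natural blink-rate range


def count_blinks(eye_openness: list[float], closed_threshold: float = EYE_CLOSED_THRESHOLD) -> int:
    """Count open-to-closed transitions in a per-frame eye-openness signal."""
    blinks = 0
    was_closed = False
    for score in eye_openness:
        is_closed = score < closed_threshold
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blink_rate_plausible(eye_openness: list[float], fps: float) -> bool:
    """Return True if blinks per minute fall inside the assumed natural range."""
    if fps <= 0 or not eye_openness:
        return False
    minutes = len(eye_openness) / fps / 60.0
    rate = count_blinks(eye_openness) / minutes
    low, high = PLAUSIBLE_BLINKS_PER_MIN
    return low <= rate <= high


@dataclass(frozen=True)
class FrameMeta:
    """Capture parameters reported for one frame (hypothetical field set)."""
    iso: int
    aperture: float
    fps: float
    width: int
    height: int


def capture_params_consistent(frames: list[FrameMeta], max_iso_ratio: float = 2.0) -> bool:
    """Return False if frame rate, resolution, or aperture changes mid-stream,
    or if ISO jumps by more than max_iso_ratio between consecutive frames."""
    for prev, cur in zip(frames, frames[1:]):
        if (prev.fps, prev.width, prev.height, prev.aperture) != (cur.fps, cur.width, cur.height, cur.aperture):
            return False
        if max(prev.iso, cur.iso) > max_iso_ratio * min(prev.iso, cur.iso):
            return False
    return True
```

Because blink rates vary from person to person and cameras legitimately adjust exposure, each rule here would at most be one weak signal among many rather than a verdict on its own.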

Another method relies on the device’s built-in accelerometer, which senses motion along each axis. Its readings could be compared with the movement of objects in the recorded video to determine whether the camera was indeed hacked.
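One way to turn the accelerometer idea into a check is to test whether the phone’s physical shake and the on-screen motion rise and fall together: a live camera held in the hand should show both, while an injected stream is decoupled from the sensor. The sketch below assumes the sensor readings are already aligned one-per-frame and that a per-frame video-motion estimate (for example, from optical flow) is computed elsewhere. The correlation threshold and all function names are hypothetical.

```python
import math

Vector3 = tuple[float, float, float]


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def device_motion(accel: list[Vector3]) -> list[float]:
    """Size of the change in acceleration between consecutive readings.
    Differencing removes the constant gravity offset while the phone's
    orientation stays roughly fixed."""
    return [math.dist(a, b) for a, b in zip(accel, accel[1:])]


def motion_consistent(accel: list[Vector3], frame_motion: list[float], min_corr: float = 0.5) -> bool:
    """accel: one (x, y, z) reading per video frame (assumed pre-aligned).
    frame_motion: motion estimate for each frame-to-frame transition,
    so len(frame_motion) == len(accel) - 1.
    Returns True if device shake and on-screen motion are correlated."""
    if len(frame_motion) != len(accel) - 1:
        raise ValueError("frame_motion must have one entry per frame transition")
    return pearson(device_motion(accel), frame_motion) >= min_corr
```

Correlating magnitudes alone is a deliberately crude proxy chosen for brevity; it ignores the direction of motion, which a more careful comparison would also take into account.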

In addition, forensic analysis of individual video frames can reveal signs of manipulation, such as double-compressed regions of the image or traces of computer-generated deepfake imagery.
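Double compression leaves a statistical fingerprint that can be demonstrated in a few lines. When JPEG-style quantization is applied twice with different step sizes, the histogram of the resulting DCT coefficients develops periodic gaps or peaks that a single compression does not produce. The toy sketch below uses synthetic Gaussian values as a stand-in for the coefficients at one DCT frequency and shows the case where the first step (3) is coarser than the second (2), which empties every third histogram bin. A real detector would read the actual coefficients from the image file and use more robust statistics; the thresholds and function names here are hypothetical.

```python
import math
import random
from collections import Counter


def quantize(values: list[float], step: float) -> list[int]:
    """Quantize to integer indices, rounding halves up: floor(v / step + 0.5)."""
    return [math.floor(v / step + 0.5) for v in values]


def empty_bin_fraction(indices: list[int], half_width: int = 10) -> float:
    """Fraction of histogram bins in [-half_width, half_width] with zero count."""
    counts = Counter(indices)
    bins = range(-half_width, half_width + 1)
    return sum(1 for b in bins if counts[b] == 0) / len(bins)


def looks_double_compressed(indices: list[int], half_width: int = 10,
                            max_empty_fraction: float = 0.2) -> bool:
    """Flag a coefficient histogram whose central bins show periodic gaps."""
    return empty_bin_fraction(indices, half_width) > max_empty_fraction


def synthetic_coefficients(n: int = 5000, sigma: float = 10.0, seed: int = 0) -> list[float]:
    """Demo-only stand-in for unquantized DCT coefficients at one frequency."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma) for _ in range(n)]


# Usage: compress once with step 2, versus first with step 3 and then again with step 2.
coeffs = synthetic_coefficients()
single = quantize(coeffs, 2)
double = quantize([3 * k for k in quantize(coeffs, 3)], 2)
print(looks_double_compressed(single), looks_double_compressed(double))
```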